I tried covering Gen Hoshino’s “Koi” with a Vocaloid.

The 2017 karaoke popularity rankings have been announced.

I was thrilled to see that Gen Hoshino’s “Koi,” one of my favorites, dominated the top spot on both DAM and JOYSOUND.

With its fun, wonderful melody, cheerful dance choreography, and a promotion that emphasized enjoyment—uncommon in recent years—I think it gained support from a wide range of generations.

If more songs like this were released, I think even more people would grow fond of music.

With that in mind, I’m thinking of covering this beloved song myself using a Vocaloid.

First, let's create the backing track.

When you decide to do a cover of a song, the first thing you have to do is recreate the backing track.

If it's just for your own enjoyment, singing over the CD audio might be fine, but that becomes a problem once you publish the result somewhere, so I'll create the backing track myself.

Fortunately, this song is simple, so I think it’s relatively easy even for beginners at transcribing by ear (I’m not good at it myself).

Sheet music is also commercially available, so those who aren't good at picking out notes by ear can buy it and practice by entering the parts, which makes for good study.

After a quick listen to check the parts, the instrumentation turned out to be as follows.

- Drums

- Piano

- Strings

- Erhu

- Xylophone

- Bass

- Guitar

- Vocal + Chorus

Among these, the bass and drums can be clearly heard, so I’ll make the transcription focusing on the bass.

First, I roughly transcribed the bass, strings, and piano to get an outline of the song.

The voice-like sound in the intro and behind the chorus turned out to be an erhu.

This was an "I see" moment: while looking into what could produce a sound like that, I found information that an erhu had been used.

At first, though, I couldn't find an erhu among the sound sources bundled with Cubase 9.5 or in the KONTAKT libraries I own.

I wondered if I had to buy it, but after looking into it a bit more, I found an erhu sound in the HALion Sonic SE library, so I used that this time.

Since they’re both Chinese instruments, they produce similar sounds, so it ended up sounding about right.

Surprisingly, the tricky part turned out to be the xylophone sound source.

The xylophone is an instrument that produces sound by striking it with sticks called mallets, and the sound changes quite a lot depending on the type of mallet used.

Orchestral-type sound libraries tend to have softer tones with things like soft mallets, while pop-oriented libraries often have harder, more rigid tones with hard mallets.

I checked the sound sources I have, but I couldn’t quite find anything that fit. However, the xylophone sound included in Xpand!2, which I’d bought on sale, was pretty close, so I went with that.

I’ve been relying on Computer Music Japan, which quickly compiles sale information on gear like these sound sources.

The part itself isn't doing anything very complicated, so it's probably easy to copy, but each note has a strong presence. If the tone is too far off, the vibe won't come through at all, so I was fortunate to have a good sound to work with.

Since I’m not familiar with guitars, I have to rely entirely on programmed/virtual instruments for the guitar sound. I tried playing it with a rough, estimated sound, but it didn’t resemble it at all.

I consulted someone knowledgeable about guitars and got some hints like, “A humbucker might work,” and “How about a Les Paul?” So I decided to try using JunkGuitar.

JunkGuitar is a domestically made guitar sound library, and it's easy to use even for beginners like me, so I find it very handy.

JunkGuitar also goes by the name Vintage Humbucker Guitar.

Thanks to the hints and a good sound source, I somehow managed to pull it together.

Vocaloid programming

Once the instrumental track is finished, we'll create the vocal part.

Since the instrumental production stage already captured the pitches for the lead vocal and chorus, I first had a Vocaloid sing it straight to check what kind of vibe it would have.

As in my previous covers, I'll use both IA and IA Rocks for the singing.

I tried importing the roughly inputted vocal guide part from the backing track directly into the Vocaloid track and assigning the lyrics, but as expected, the flat, unedited input didn’t capture the right feel.

First, I'll listen to it as-is and note any points of concern, then I'll fix them one by one from there.

- The note lengths are quite different from the original.

- The original has a fine vibrato.

- The accents are not strong enough.

- The voice is thin.

When I make a backing track for a song with vocals, I enter the melody with an alto sax sound and set every note length to tenuto, so the durations need fine adjustment afterward.

What unexpectedly caught my attention were the parts where vibrato was applied to short notes like quarter notes.

These kinds of subtle details are time-consuming, but if you leave them out it feels lacking, so I checked while listening to the original track.

Lack of accent and thin vocal tone are challenges that could be called the fate of Vocaloids, but you can bolster accents by using dynamics in the VOCALOID Editor, and it’s also possible to make partial adjustments after exporting to audio.

For the thinness of the voice, I rely on effects that support the vocals.

Fortunately, many plugins nowadays are excellent, so it's become possible to obtain results that more or less match our expectations.

I often use Waves’ SSL Collection and Eddie Kramer’s Signature Series.

I used analog-style harmonic enhancement to make the sound thicker.
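As a rough illustration of what "analog-style harmonic enhancement" means in general, here is a minimal Python sketch. It is not how the Waves plugins work internally; it only assumes a simple `tanh` soft-clipping curve, and the names `saturate`, `harmonic_level`, and `drive` are made up for this example. Pushing a pure sine wave through the curve adds overtones that were not in the input, which is what makes a thin sound feel thicker.

```python
import math

def saturate(samples, drive=2.0):
    """Soft-clip samples with tanh; higher drive adds more overtones.
    (Hypothetical helper, not any plugin's real algorithm.)"""
    norm = math.tanh(drive)  # keeps the output within -1.0 .. 1.0
    return [math.tanh(drive * s) / norm for s in samples]

def harmonic_level(samples, k):
    """Magnitude of the k-th harmonic, assuming samples hold exactly one period."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n

N = 256
sine = [0.8 * math.sin(2 * math.pi * i / N) for i in range(N)]  # pure tone
warm = saturate(sine)                                            # tone with added overtones

print("3rd harmonic before:", harmonic_level(sine, 3))
print("3rd harmonic after: ", harmonic_level(warm, 3))
```

The pure sine has essentially no third harmonic, while the saturated version does. Real vocal plugins combine this kind of coloring with EQ and compression, so treat the sketch only as a picture of the idea.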

Points for revision, etc.

First, regarding note lengths, the parts you sustain and the parts you stop are crucial points in a song’s rhythm.

With a basic input, Vocaloid tends to produce relatively smooth singing (overall a bit legato), but since “Koi” is a rather fast-tempo song, you need to add clear contrasts in dynamics and articulation.

However, songs with fast tempos and densely packed notes are also an area where Vocaloid tends to struggle.

In genres where chopping the notes into isolated attacks isn't appropriate, the slightly legato rendering brings another issue: each note starts gliding toward the next one early, which can make it difficult to control pitch as intended.

I ran into this problem again, but there is a solution for it.

Instead of sustaining a single note, split it into two consecutive notes of the same pitch: the main note, followed by a short note at the end that carries a long-vowel mark (a tie) as its lyric. The first note then holds its pitch regardless of the length of the short note that follows.

By using this, it became possible to maintain accurate pitch even during fine movements.

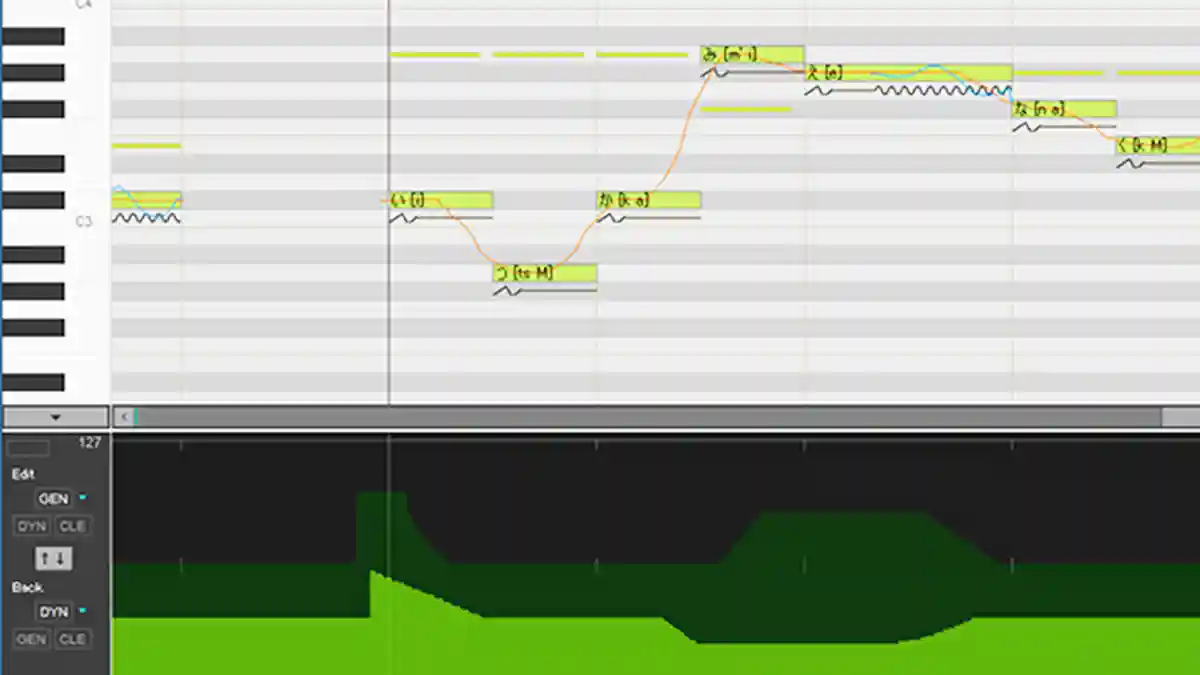

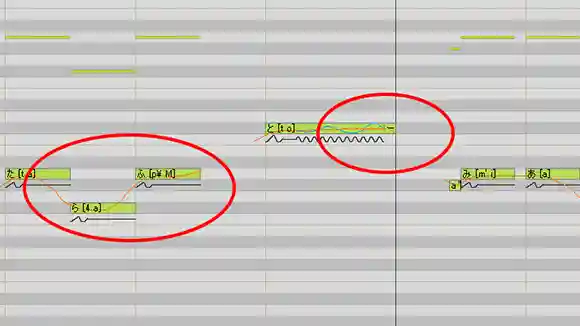

You can see the pitch movement shown by the red lines inside the two circles in the figure.

In the left circle, the red line curves early toward the next note, whereas in the right circle, the line leading to the short note with the tie does not curve.

With adjustments like these, I tried to keep the pitch movement as close as possible to the original.
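The note-splitting trick can be sketched in a few lines of Python. This is only a model of the edit, not VOCALOID's file format or API: the `Note` class, the tick values, the 60-tick tail length, and the use of "-" as the tie lyric are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Note:
    start: int   # position in ticks (hypothetical unit)
    length: int  # duration in ticks
    pitch: int   # MIDI note number
    lyric: str   # syllable, or "-" to continue the previous vowel

def split_with_tail(note: Note, tail: int = 60) -> List[Note]:
    """Split one sustained note into a held note plus a short same-pitch tail."""
    if note.length <= tail:
        return [note]  # too short to split
    head = Note(note.start, note.length - tail, note.pitch, note.lyric)
    tail_note = Note(note.start + head.length, tail, note.pitch, "-")
    return [head, tail_note]

long_note = Note(start=0, length=480, pitch=64, lyric="ko")
pieces = split_with_tail(long_note)
for p in pieces:
    print(p)
```

The two pieces cover exactly the same time span as the original note, so the rhythm is unchanged. Only the early glide toward the next pitch is pushed into the short tail note.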

As for adjusting vibrato, I don’t enter any pitch bends at all.

Since VOCALOID4 Editor for Cubase adds a pitch rendering feature (the red pitch line shown above), I set the vibrato quality in the note properties for notes that need vibrato.

I think you could certainly do the same with hand-drawn pitch curves, but with pitch rendering added, you can now visually check the output even when you enter values through parameters.

Because of this, I can adjust the settings while checking the result, namely how wide the vibrato swings at a given speed, so the work has become easier.
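To picture what the depth and speed settings do to the pitch line, here is a small Python sketch that models vibrato as a sine wave added to the note's pitch after a delay. This is a simplified model, not VOCALOID's actual vibrato engine, and the parameter names (`depth_cents`, `rate_hz`, `delay_ratio`) are assumptions chosen for this example.

```python
import math

def vibrato_curve(note_seconds, depth_cents, rate_hz, delay_ratio=0.5, step=0.01):
    """Return pitch offsets in cents, sampled every `step` seconds.
    The note stays flat until `delay_ratio` of its length has passed."""
    onset = note_seconds * delay_ratio
    n = int(round(note_seconds / step))
    curve = []
    for i in range(n):
        t = i * step
        if t < onset:
            curve.append(0.0)  # no vibrato yet
        else:
            curve.append(depth_cents * math.sin(2 * math.pi * rate_hz * (t - onset)))
    return curve

# A quarter note at roughly 120 BPM lasts about 0.5 seconds.
curve = vibrato_curve(note_seconds=0.5, depth_cents=30, rate_hz=6)
print(len(curve), max(curve), min(curve))
```

On a short note there is only room for one or two cycles, so small changes to the delay or speed change the shape of the line noticeably. That is why being able to see the rendered curve helps.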

There are parts sung in a high pitch with a falsetto voice, but Vocaloids are not good at that kind of head voice either.

This part requires moving multiple parameters at once, making it quite complex.

Put simply, it’s a matter of adjusting parameters like GEN (gender factor), BRI (brightness), OPN (opening), and XSY (cross-synthesis) up or down. However, this varies depending on the type of VOCALOID you use (since each character has different traits, there isn’t a single definitive method). Please consider an approach tailored to the characteristics of the specific VOCALOID you’re using.

Here, I made major adjustments to GEN and DYN.

Although it isn't visible, I also changed BRI and OPN in addition to these.

If you ask whether it went well, it's hard to say, but I think I managed to get close to the vibe.

Here is the finished audio track.

be/XMznuiT-DcU

It's still hard to call it perfect, but I managed to have the VOCALOID IA sing 'Koi' (Love).

It's really fun to cover your favorite songs.

If you're good at editing Vocaloid, you might be able to make it sing even better, and there are also tools for Vocaloid like 'VocaListener' (Bokarisu) that can generate Vocaloid singing data from recorded human vocals.

You could start with something simple, like entering the notes flat with no editing.

It’ll surely be fun once you gradually get the hang of it and can make it sing various songs.